Publicly Shared Datasets:

1. CHN6-CUG Road Dataset: Road datasets of new large-scale satellite remote sensing images for representative cities in China

2. SOS: Deep-SAR Oil Spill Dataset

CHN6-CUG Road Dataset: Road datasets of new large-scale satellite remote sensing images for representative cities in China

Abstract

The CHN6-CUG Road Dataset was produced and shared by Qiqi Zhu's team in the URSmart Group at China University of Geosciences, Wuhan. It is the first large-scale satellite remote sensing road dataset covering representative cities in China. It consists of high-resolution satellite images with manually annotated, pixel-level road labels for six representative Chinese cities. The research was published in ISPRS Journal of Photogrammetry and Remote Sensing.

1. CHN6-CUG Road Dataset

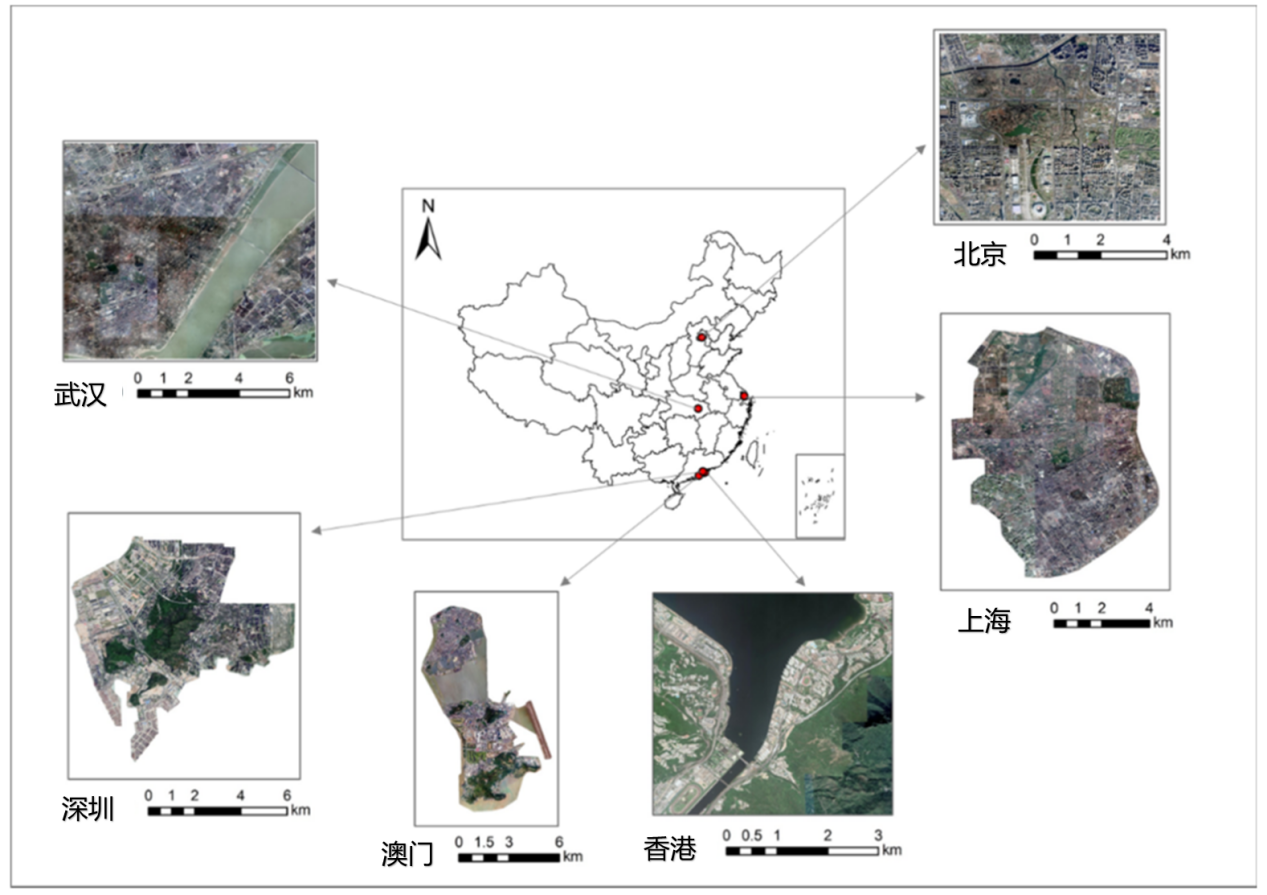

The CHN6-CUG Road Dataset is a new large-scale satellite image dataset of representative cities in China. Its remote sensing base imagery comes from Google Earth. Six cities that differ in level of urbanization, size, degree of development, urban structure, history, and culture were selected: the Chaoyang area of Beijing, the Yangpu District of Shanghai, Wuhan city center, the Nanshan area of Shenzhen, the Shatin area of Hong Kong, and Macao. The study areas are shown in Fig. 1. Labeled roads include both covered and uncovered roads, distinguished by the degree of road coverage. By physical geographic type, labeled roads include railways, highways, urban roads, rural roads, and others.

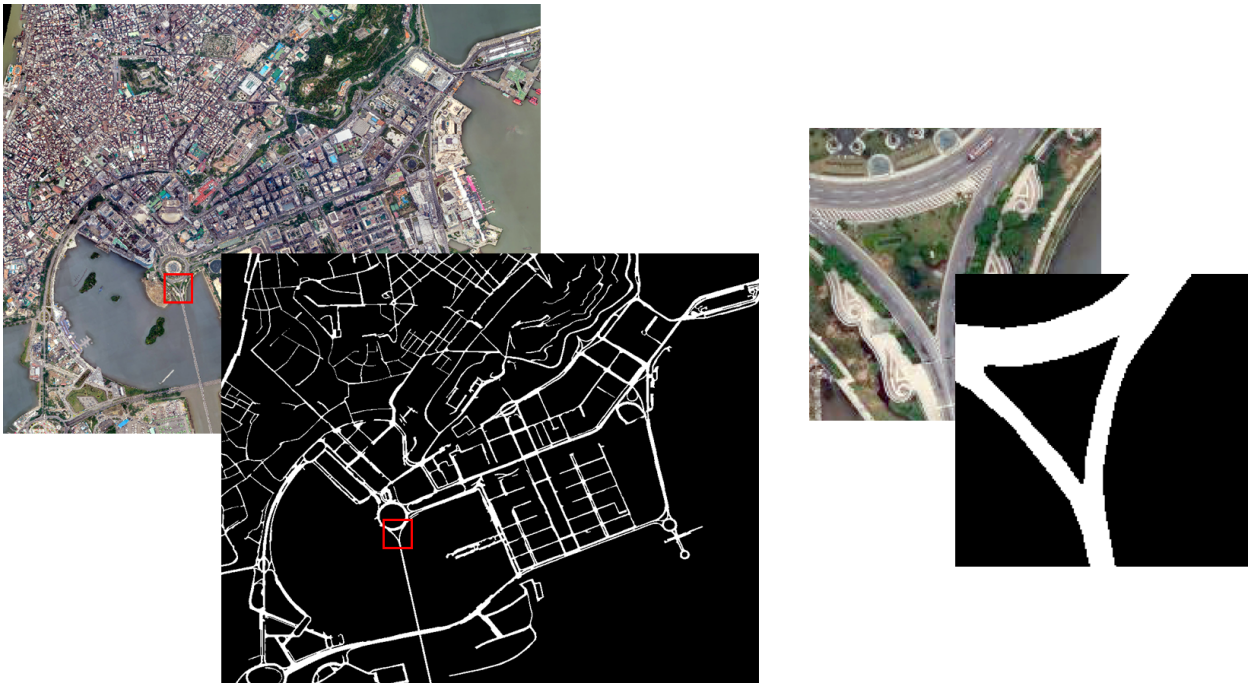

CHN6-CUG contains 4511 labeled images of 512×512 pixels at a resolution of 50 cm/pixel, divided into 3608 images for model training and 903 for testing and evaluation. The original images are in .jpg format and the road label maps are in .png format. The compressed dataset is 175 MB.

Fig. 1. Overview of the study areas in the CHN6-CUG Road Dataset.

Fig. 2. Samples from the CHN6-CUG Road Dataset.
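The figures quoted in this section can be cross-checked with a few lines of arithmetic. The sketch below only uses the numbers stated above (3608 training images, 903 test images, 512×512 pixels, 50 cm/pixel). The covered-area estimate assumes that tiles do not overlap, which the description does not state, so it should be read as an upper bound.

```python
# Figures quoted in the dataset description.
TRAIN_IMAGES = 3608
TEST_IMAGES = 903
TILE_SIZE_PX = 512
GSD_M_PER_PX = 0.5  # 50 cm/pixel

total_images = TRAIN_IMAGES + TEST_IMAGES
train_fraction = TRAIN_IMAGES / total_images

# Ground footprint of one tile.
tile_side_m = TILE_SIZE_PX * GSD_M_PER_PX
tile_area_km2 = (tile_side_m / 1000) ** 2

# Assumption: tiles do not overlap, so this is an upper bound on the area.
max_covered_area_km2 = total_images * tile_area_km2

print(f"total images: {total_images}")
print(f"train fraction: {train_fraction:.3f}")
print(f"tile footprint: {tile_side_m:.0f} m x {tile_side_m:.0f} m")
print(f"upper bound on covered area: {max_covered_area_km2:.1f} km^2")
```

The split is roughly 80/20, and each tile covers a 256 m × 256 m patch of ground.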

2. Download

The CHN6-CUG Road Dataset directory tree is as follows:

CHN6-CUG
├───train
│   ├───gt
│   └───images
└───val
    ├───gt
    └───images
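Given this layout, a loader mainly has to pair each satellite image in `images` with its label map in `gt`. The following is a minimal sketch, not part of the official release. It assumes that an image and its label share a file name stem, optionally followed by a tag; `image_suffix` and `mask_suffix` are hypothetical parameters for stripping such tags. The `.jpg` and `.png` extensions come from the dataset description. Note that the tree names the second split `val`, while the description refers to it as the test set.

```python
from pathlib import Path


def pair_images_with_masks(root, split, image_suffix="", mask_suffix=""):
    """Return sorted (image_path, mask_path) pairs for one split.

    image_suffix and mask_suffix are hypothetical knobs for trailing
    file name tags that are stripped before stems are compared.
    """
    split_dir = Path(root) / split
    # Index label maps by their stem with the optional tag removed.
    masks = {
        p.stem.removesuffix(mask_suffix): p
        for p in (split_dir / "gt").glob("*.png")
    }
    pairs = []
    for image_path in sorted((split_dir / "images").glob("*.jpg")):
        key = image_path.stem.removesuffix(image_suffix)
        # Images without a matching label map are skipped.
        if key in masks:
            pairs.append((image_path, masks[key]))
    return pairs
```

Calling `pair_images_with_masks("CHN6-CUG", "train")` and comparing the length of the result with the 3608 training images stated above is a quick way to confirm that the naming assumption holds for a downloaded copy. `str.removesuffix` requires Python 3.9 or later.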

3. Experiment

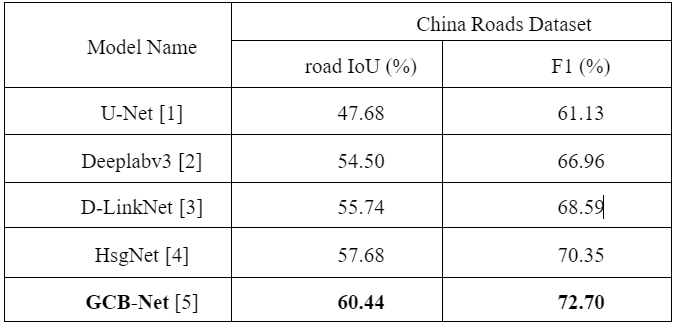

The performance of typical deep learning road extraction algorithms on the CHN6-CUG Road Dataset is shown in Table 1.

Table 1. Comparison of different road network extraction methods on the CHN6-CUG Road Dataset.
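Table 1 is provided as an image, and the text here does not list which metrics it reports. Binary road masks are commonly scored with precision, recall, F1, and intersection over union (IoU), so the sketch below shows how those could be computed for a predicted mask against a label map. It is an illustration under that assumption, not the evaluation code used for Table 1, and `road_metrics` is a hypothetical helper name.

```python
import numpy as np


def road_metrics(pred, truth):
    """Precision, recall, F1 and IoU for binary road masks (nonzero = road)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.count_nonzero(pred & truth)   # road predicted and labeled
    fp = np.count_nonzero(pred & ~truth)  # road predicted, background labeled
    fn = np.count_nonzero(~pred & truth)  # background predicted, road labeled

    def ratio(num, den):
        # Define empty-denominator cases as 0.0 to avoid division by zero.
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "precision": precision,
        "recall": recall,
        "f1": ratio(2 * precision * recall, precision + recall),
        "iou": ratio(tp, tp + fp + fn),
    }
```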

References

1. Ronneberger, O., Fischer, P., & Brox, T. (2015, October). U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical image computing and computer-assisted intervention (pp. 234-241). Springer, Cham.

2. Chen, L. C., Papandreou, G., Schroff, F., & Adam, H. (2017). Rethinking atrous convolution for semantic image segmentation. arXiv preprint arXiv:1706.05587.

3. Zhou, L., Zhang, C., Wu, M., 2018. D-LinkNet: LinkNet With Pre-trained Encoder and Dilated Convolution for High Resolution Satellite Imagery Road Extraction. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 182–186.

4. Xie, Y., Miao, F., Zhou, K., & Peng, J. (2019). HsgNet: A Road Extraction Network Based on Global Perception of High-Order Spatial Information. ISPRS International Journal of Geo-Information, 8(12), 571.

5. Zhu, Q., Zhang, Y., Wang, L., Zhong, Y., Guan, Q., Lu, X., ... & Li, D. (2021). A Global Context-aware and Batch-independent Network for road extraction from VHR satellite imagery. ISPRS Journal of Photogrammetry and Remote Sensing, 175, 353-365.

6. Demir, I., Koperski, K., Lindenbaum, D., Pang, G., Huang, J., Basu, S., Hughes, F., Tuia, D., Raskar, R., 2018. DeepGlobe 2018: A challenge to parse the earth through satellite images. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 172–17209.

7. Van Etten, A., Lindenbaum, D., Bacastow, T. M., 2018. SpaceNet: A remote sensing dataset and challenge series. arXiv preprint arXiv:1807.01232.

8. Chen, Y., Kalantidis, Y., Li, J., Yan, S., Feng, J., 2018. A^2-Nets: Double attention networks. In: Advances in Neural Information Processing Systems, pp. 352–361.

9. Zhu, Q., Zhong, Y., Zhang, L., Li, D., 2018. Adaptive deep sparse semantic modeling framework for high spatial resolution image scene classification. IEEE Trans. Geosci. Remote Sens. 56 (10), 6180–6195.

10. Lu, X., Zhong, Y., Zheng, Z., Liu, Y., Zhao, J., Ma, A., Yang, J., 2019. Multi-scale and multi-task deep learning framework for automatic road extraction. IEEE Trans. Geosci. Remote Sens. 57 (11), 9362–9377.

11. Tao, C., Qi, J., Li, Y., Wang, H., Li, H., 2019. Spatial information inference net: Road extraction using road-specific contextual information. ISPRS J. Photogramm. Remote Sens. 158, 155–166.

12. Zhu, Q., Li, Z., Zhang, Y., Guan, Q., 2020. Building Extraction from High Spatial Resolution Remote Sensing Images via Multiscale-Aware and Segmentation-Prior Conditional Random Fields. Remote Sens. 12 (23), 3983.

4. Copyright

The copyright belongs to the URSmart Group, China University of Geosciences. The CHN6-CUG Road Dataset may be used for academic purposes only, and any use must cite the paper below. Commercial use is prohibited; in case of violation, the URSmart Group of China University of Geosciences, Wuhan reserves the right to pursue legal action.

Please cite this paper if you use this dataset:

Q. Zhu, Y. Zhang, L. Wang, et al., "A Global Context-aware and Batch-independent Network for road extraction from VHR satellite imagery," ISPRS Journal of Photogrammetry and Remote Sensing, 2021, 175: 353-365. DOI: 10.1016/j.isprsjprs.2021.03.016.

5. Contact

If you have any problems or feedback when using the CHN6-CUG Road Dataset, please contact:

Miss Yanan Zhang: yanan.zhang@cug.edu.cn

Prof. Qiqi Zhu: zhuqq@cug.edu.cn

SOS: Deep-SAR Oil Spill Dataset

Abstract

The Deep-SAR Oil Spill (SOS) dataset was produced and shared by the URSmart Group of China University of Geosciences, Wuhan. It was constructed to advance the oil spill detection task and provides a benchmark resource for developing state-of-the-art algorithms for oil spill detection in SAR images and related tasks.

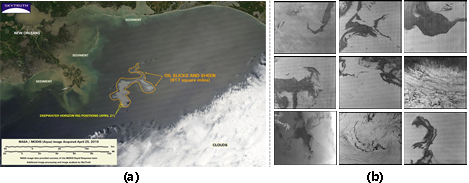

1. Deep-SAR Oil Spill (SOS) dataset

The SOS dataset has two study areas: the Gulf of Mexico oil spill area and the Persian Gulf oil spill area. Fig. 1 shows the location of the oil spill in the Gulf of Mexico and a typical subset of the selected ALOS satellite images. Fig. 2 shows the location of the oil spill in the Persian Gulf and a typical subset of the selected Sentinel-1A satellite images. To extend the original dataset, data augmentation was applied: cropping, rotation, noise addition, and other operations were performed on the original grayscale images. In the end, 3101 images from the Gulf of Mexico oil spill area were used for training and 776 for testing, and 3354 images from the Persian Gulf oil spill area were used for training and 839 for testing.
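The augmentation operations named above (cropping, rotating, adding noise) can be sketched with NumPy on a single-channel image. This is an illustration only: the crop size, the restriction to 90-degree rotations, and the Gaussian noise model are assumptions, since the description does not give the parameters actually used, and `augment` is a hypothetical helper.

```python
import numpy as np


def augment(image, rng, crop_size=256, noise_std=5.0):
    """Random crop, 90-degree rotation and Gaussian noise on a 2-D uint8 image.

    crop_size and noise_std are illustrative values, not taken from the
    dataset description.
    """
    height, width = image.shape
    top = rng.integers(0, height - crop_size + 1)
    left = rng.integers(0, width - crop_size + 1)
    patch = image[top:top + crop_size, left:left + crop_size]

    # Rotate by a random multiple of 90 degrees so no interpolation is needed.
    patch = np.rot90(patch, k=int(rng.integers(0, 4)))

    # Add zero-mean Gaussian noise, then clip back to the valid 8-bit range.
    noisy = patch.astype(np.float32) + rng.normal(0.0, noise_std, patch.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)


rng = np.random.default_rng(0)
# Random stand-in for a real grayscale SAR tile.
sar_image = rng.integers(0, 256, size=(512, 512), dtype=np.uint8)
augmented = augment(sar_image, rng)
print(augmented.shape, augmented.dtype)  # (256, 256) uint8
```

For a segmentation dataset, the same crop and rotation would also have to be applied to the label map so that image and label stay aligned, while the noise would be added to the image only.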

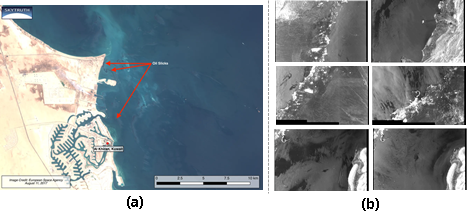

Fig. 1. The location of the oil spill in the Gulf of Mexico. (a) NASA/MODIS satellite image taken April 25 showing oil slicks from the Deepwater Horizon disaster. (Source: John Amos 2010 [1]) (b) Typical subset of the selected ALOS satellite images.

Fig. 2. The location of the oil spill in the Persian Gulf. (a) Sentinel-2A multispectral satellite image showing an oil slick making landfall along Kuwait’s coast near Al Khiran on August 11, 2017. (Source: John Amos 2017 [2]) (b) Typical subset of the selected Sentinel-1A satellite images.

References

[1] “Gulf Oil Spill Covers 817 Square Miles,” SkyTruth, Apr. 26, 2010. [Online]. Available: https://skytruth.org/2010/04/gulf-oil-spill-covers-817-square-miles/ (accessed Mar. 22, 2021).

[2] “Satellite Imagery Reveals Scope of Last Week’s Oil Spill in Kuwait,” SkyTruth, Aug. 15, 2017. [Online]. Available: https://skytruth.org/2017/08/satellite-imagery-reveals-scope-of-last-weeks-massive-oil-spill-in-kuwait/ (accessed Mar. 19, 2021).

2. Download

The SOS dataset directory tree is as follows:

SOS
├───train
│   ├───gt
│   └───images
└───val
    ├───gt
    └───images

3. Copyright

The copyright belongs to the URSmart Group, China University of Geosciences. The SOS Dataset may be used for academic purposes only, and any use must cite the paper below. Commercial use is prohibited; in case of violation, the URSmart Group of China University of Geosciences, Wuhan reserves the right to pursue legal action.

Please cite this paper if you use this dataset:

Q. Zhu, Y. Zhang, Z. Li, X. Yan, Q. Guan, Y. Zhong, L. Zhang, and D. Li, “Oil Spill Contextual and Boundary-Supervised Detection Network Based on Marine SAR Images,” IEEE Transactions on Geoscience and Remote Sensing, 2021. DOI: 10.1109/TGRS.2021.3115492

4. Contact

If you have any problems or feedback when using the SOS Dataset, please contact:

Miss Yanan Zhang: yanan.zhang@cug.edu.cn

Prof. Qiqi Zhu: zhuqq@cug.edu.cn